This example demonstrates the use of the extended MHD module in OFT. In this example reconnection in a periodic-slab current sheet will be simulated following the setup used to the GEM Challange.

Code Walk Through

The code consists of two components. First, a helper module is defined that includes class implementations and other components for initialization and post-processing and the main run program.

Code Walk Through

As usual, we start with a few module imports for required functionality.

MODULE gem_helpers

IMPLICIT NONE

Classes and subroutines used for synthetic diagnostics.

Definition diagnostic.F90:16

FEM utility classes and functions.

Definition fem_utils.F90:16

Plasma parameters and evaluation routines.

Definition mhd_utils.F90:14

real(r8), parameter mu0

- Permeability of free-space

Definition mhd_utils.F90:18

Base environment functions and aliases for Open FUSION Toolkit.

Definition oft_base.F90:23

FE operators for full H(Curl) + Grad(H^1) space.

Definition hcurl_grad_operators.F90:27

HDF5 file manipulation for output and restart data.

Definition oft_io.F90:19

Abstract vector and matrix interfaces.

Definition lin_alg_base.F90:20

Lagrange FE operator definitions.

Definition lagrange_operators.F90:25

Module for modeling extended MHD with a mixed conforming/lagrange basis set.

Definition xmhd.F90:73

type(oft_ml_fem_comp_type), pointer, public xmhd_ml_hcurl_grad

Definition xmhd.F90:395

type(oft_ml_fem_type), pointer, public xmhd_ml_hcurl

Definition xmhd.F90:394

Synthetic flux diagnostic.

Definition diagnostic.F90:65

Base class for interpolation of a FE field.

Definition fem_utils.F90:29

Interpolate a H(Curl) + Grad(H^1) vector field.

Definition hcurl_grad_operators.F90:58

Binary output file object.

Definition oft_io.F90:27

Abstract vector class.

Definition lin_alg_base.F90:69

Interpolate a Lagrange field.

Definition lagrange_operators.F90:53

Forcing object class for xMHD.

Definition xmhd.F90:129

Probe object class for xMHD.

Definition xmhd.F90:145

Object for sub-fields used by oft_xmhd_push and oft_xmhd_pop.

Definition xmhd.F90:245

Class definitions

Now we define a class for generating the initial condition through an implementation of fem_interp for the current sheet and perturbation field. Additionally, we define a class by extending the abstract oft_xmhd_probe class, which will be used during post-processing (xmhd_plot) to evaluate the reconnected flux as a function of time.

REAL(8) :: dpsi = 1.d-1

REAL(8) :: den_inf = 2.d-1

REAL(8) :: lam = 0.5d0

REAL(8) :: Lx = 25.6

REAL(8) :: Lz = 12.8

CHARACTER(LEN=2) :: field = 'n'

CONTAINS

PROCEDURE :: interp => gem_interp_apply

END TYPE gem_interp

INTEGER(4) :: io_unit

LOGICAL :: initialized = .false.

TYPE(oft_hcurl_grad_rinterp), POINTER :: Bfield => null()

TYPE(flux_probe) :: flux_probe

TYPE(oft_bin_file) :: flux_hist

CONTAINS

PROCEDURE :: apply => gem_probe_apply

END TYPE gem_probe

contains

Initial condition field interpolator

To set the initial conditions we define a single interpolation object/function, with a switch for each of the fields with non-uniform initial distributions. Uniform fields are set in the main run program as they do not require FE projection.

The non-uniform initial conditions for this case is given by

\[ n_0 = (cosh(z / \lambda))^{-2} + n_{\infty} \]

\[ B_0 = tanh(z / \lambda) \hat{x} \]

The perturbation is then given by

\[ \delta B = -\frac{\pi}{L_z} cos(2 \pi x / L_x) sin(\pi z / L_z) \hat{x} + \frac{2 \pi}{L_x} sin(2 \pi x / L_x) cos(\pi z / L_z) \hat{x} \]

SUBROUTINE gem_interp_apply(self,cell,f,gop,val)

CLASS(GEM_interp), INTENT(inout) :: self

INTEGER(4), INTENT(in) :: cell

REAL(8), INTENT(in) :: f(:)

REAL(8), INTENT(in) :: gop(3,4)

REAL(8), INTENT(out) :: val(:)

REAL(8) :: pt(3),Beq(3),Bper(3)

pt = self%mesh%log2phys(cell,f)

SELECT CASE(trim(self%field))

CASE('n0')

val = 1.d0/(cosh(pt(3)/self%lam))**2 + self%den_inf

CASE('b0')

beq = (/tanh(pt(3)/self%lam), 0.d0, 0.d0/)

val = beq

CASE('db')

bper(1) = -pi*cos(2.d0*pi*pt(1)/self%Lx)*sin(pi*pt(3)/self%Lz)/self%Lz

bper(2) = 0.d0

bper(3) = 2.d0*pi*sin(2.d0*pi*pt(1)/self%Lx)*cos(pi*pt(3)/self%Lz)/self%Lx

val = bper

CASE DEFAULT

CALL oft_abort(

'Unknown field component',

'GEM_interp_apply',__file__)

END SELECT

END SUBROUTINE GEM_interp_apply

subroutine oft_abort(error_str, sname, fname)

Graceful abort for Open FUSION Toolkit.

Definition oft_base.F90:404

Reconnected flux probe

Arbitrary signals can be computed for post-processing by passing an implementation of oft_xmhd_probe to xmhd_plot. In this case we compute the flux in the half plane ( \( z>0; x=0 \)), which can then be used to compute the "reconnected flux" as \( \Psi(t_0) - Psi(t) \). On the first call a flux_probe object is initialized and used on subsequent calls, whose result it save to a history file.

SUBROUTINE gem_probe_apply(self,sub_fields,t)

CLASS(GEM_probe), INTENT(inout) :: self

type(xmhd_sub_fields), intent(inout) :: sub_fields

REAL(8), INTENT(in) :: t

REAL(8) :: tflux

REAL(4), DIMENSION(2) :: output

IF(.NOT.self%initialized)THEN

ALLOCATE(self%Bfield)

self%flux_probe%hcpc=(/0.d0, 0.d0, 8.d0/)

self%flux_probe%hcpv=(/0.125d0, 0.0d0, 0.0d0/)

CALL self%flux_probe%setup

self%flux_probe%B=>self%Bfield

self%flux_hist%filedesc = 'GEM example reconnected flux'

CALL self%flux_hist%setup('gem_flux.hist')

CALL self%flux_hist%add_field('time', 'r4', desc="Simulation time [s]")

CALL self%flux_hist%add_field('flux', 'r4', desc="Reconnected flux [Wb]")

CALL self%flux_hist%write_header

END IF

self%initialized=.true.

END IF

self%Bfield%u=>sub_fields%B

CALL self%flux_probe%eval(tflux)

output=real([t,tflux],4)

CALL self%flux_hist%open

CALL self%flux_hist%write(data_r4=output)

CALL self%flux_hist%close

END IF

END SUBROUTINE gem_probe_apply

END MODULE GEM_helpers

type(oft_env_type) oft_env

Global container for environment information.

Definition oft_base.F90:145

Driver program

With supporting infrasturcture defined, we now follow a similar structure to MUG Example: Spheromak Tilt and MUG Example: Spheromak Heating for the main run program.

PROGRAM mug_slab_recon

USE gem_helpers, ONLY: gem_interp, gem_probe

IMPLICIT NONE

Multigrid initialization using nested meshes.

Definition multigrid_build.F90:14

subroutine multigrid_construct(mg_mesh, grnd_pt)

Construct multi-level mesh.

Definition multigrid_build.F90:440

Multi-Level grid implementation using nested meshes.

Definition multigrid.F90:14

Base scalar H^1 FE class and basis evaluation.

Definition h1_basis.F90:22

subroutine oft_h1_setup(mg_mesh, order, ml_h1_obj, ml_bh1_obj, minlev)

Construct H^1 scalar FE on each mesh level.

Definition h1_basis.F90:99

H^1 FE operator definitions.

Definition h1_operators.F90:22

subroutine h1_setup_interp(ml_h1_rep)

Construct interpolation matrices for transfer between H^1 finite element spaces.

Definition h1_operators.F90:551

Base H(Curl) FE class and basis evaluation.

Definition hcurl_basis.F90:22

subroutine oft_hcurl_grad_setup(ml_hcurl_obj, ml_h1_obj, ml_hcurl_grad_obj, ml_h1grad_obj, minlev)

Construct a vector FE space for H(Curl) and it's compliment ( )

Definition hcurl_basis.F90:176

subroutine oft_hcurl_setup(mg_mesh, order, ml_hcurl_obj, ml_bhcurl_obj, minlev)

Construct H(Curl) FE basis on each mesh level.

Definition hcurl_basis.F90:104

subroutine oft_hcurl_grad_project(hcurl_grad_rep, field, x)

Project a vector field onto a H(Curl) + Grad(H^1) basis.

Definition hcurl_grad_operators.F90:1122

subroutine hcurl_grad_setup_interp(ml_hcurl_aug_rep, ml_h1_rep, create_full)

Construct interpolation matrices on each MG level.

Definition hcurl_grad_operators.F90:1393

subroutine hcurl_grad_getmop(hcurl_grad_rep, mat, bc)

Construct mass matrix for a H(Curl) + Grad(H^1) representation.

Definition hcurl_grad_operators.F90:961

H(Curl) FE operator definitions.

Definition hcurl_operators.F90:29

subroutine hcurl_setup_interp(ml_hcurl_rep)

Construct interpolation matrices on each MG level.

Definition hcurl_operators.F90:1108

Base Lagrange FE class and basis evaluation.

Definition lagrange_basis.F90:22

subroutine oft_lag_setup(mg_mesh, order, ml_lag_obj, ml_blag_obj, ml_vlag_obj, minlev)

Construct lagrange scalar FE on each mesh level.

Definition lagrange_basis.F90:317

subroutine oft_lag_getmop(fe_rep, mat, bc)

Construct mass matrix for Lagrange scalar representation.

Definition lagrange_operators.F90:770

subroutine oft_lag_vproject(fe_rep, field, x)

Project a vector field onto a lagrange basis.

Definition lagrange_operators.F90:1402

subroutine oft_lag_vgetmop(vlag_rep, mat, bc)

Construct mass matrix for Lagrange vector representation.

Definition lagrange_operators.F90:1229

subroutine lag_setup_interp(ml_lag_rep, ml_vlag_rep)

Construct interpolation matrices on each MG level.

Definition lagrange_operators.F90:1503

subroutine oft_lag_project(fe_rep, field, x)

Project a scalar field onto a lagrange basis.

Definition lagrange_operators.F90:1127

Abstract solver interfaces and select native implementations.

Definition solver_base.F90:32

Matrix and vector management routines.

Definition solver_utils.F90:14

subroutine create_ilu_pre(pre)

Create ILU(0) preconditioner (native or MKL)

Definition solver_utils.F90:633

subroutine create_diag_pre(pre)

Needs docs.

Definition solver_utils.F90:619

subroutine create_bjacobi_pre(pre, nlocal)

Needs docs.

Definition solver_utils.F90:647

subroutine create_cg_solver(solver, force_native)

Needs docs.

Definition solver_utils.F90:437

subroutine, public xmhd_lin_run(equil_fields, pert_fields, escale)

Main driver subroutine for extended MHD time advance.

Definition xmhd.F90:1101

integer(i4), public xmhd_minlev

Lowest MG level.

Definition xmhd.F90:363

real(r8), public temp_floor

Temperature floor.

Definition xmhd.F90:351

real(r8), public den_floor

Density floor.

Definition xmhd.F90:350

real(r8), public den_scale

Density scale factor.

Definition xmhd.F90:347

subroutine, public xmhd_run(initial_fields, driver, probes, profile_only)

Main driver subroutine for extended MHD time advance.

Definition xmhd.F90:554

type(oft_ml_fem_type), pointer, public xmhd_ml_lagrange

Definition xmhd.F90:391

type(oft_ml_fem_comp_type), pointer, public xmhd_ml_vlagrange

Definition xmhd.F90:396

type(oft_ml_fem_type), pointer, public xmhd_ml_h1

Definition xmhd.F90:392

subroutine, public xmhd_plot(probes)

Plotting subroutine for xMHD post-processing.

Definition xmhd.F90:4562

type(oft_ml_fem_type), pointer, public xmhd_ml_h1grad

Definition xmhd.F90:393

Multigrid meshes and ML context structure.

Definition multigrid.F90:44

Interpolate of a H(Curl) + Grad(H^1) vector field.

Definition hcurl_grad_operators.F90:80

Needs docs.

Definition hcurl_grad_operators.F90:153

Information for XDMF plotting groups in HDF5 plot file.

Definition oft_io.F90:77

Abstract matrix class.

Definition lin_alg_base.F90:566

Base class for OFT solvers.

Definition solver_base.F90:99

Local Variables

Next we define the local variables needed to initialize our case and run the time-dependent solve and post-processing. Compared to previous examples, we now have specialized initial condition and post-processing objects.

REAL(8), PARAMETER :: N0 = 5.196374d16

REAL(8), PARAMETER :: T0 = 1.d2

REAL(8), PARAMETER :: B0 = 0.002045692328575d0

CLASS(oft_solver), POINTER :: minv => null()

CLASS(oft_matrix), POINTER :: mop => null()

CLASS(oft_vector), POINTER :: u,v

TYPE(multigrid_mesh) :: mg_mesh

TYPE(xdmf_plot_file) :: plot_file

TYPE(xmhd_sub_fields) :: ic_fields,pert_fields

TYPE(oft_hcurl_grad_gzerop), TARGET :: hcurl_grad_gzerop

TYPE(oft_hcurl_grad_rinterp), TARGET :: bfield

TYPE(oft_hcurl_grad_cinterp), TARGET :: jfield

TYPE(GEM_interp), TARGET :: GEM_field

TYPE(GEM_probe) :: GEM_probes

REAL(8), POINTER :: vals(:),vec_vals(:,:)

INTEGER(4) :: i,ierr,io_unit

INTEGER(4) :: order = 2

INTEGER(4) :: minlev = 1

REAL(8) :: db = 1.d-1

LOGICAL :: pm = .false.

LOGICAL :: linear = .false.

LOGICAL :: plot_run = .false.

LOGICAL :: view_ic = .false.

NAMELIST/slab_recon_options/order,minlev,linear,db,plot_run,view_ic,pm

OFT Initialization

See API Example 1: Helmholtz solve for a detailed description of calls in this section.

OPEN(newunit=io_unit,file=

oft_env%ifile)

READ(io_unit,slab_recon_options,iostat=ierr)

CLOSE(io_unit)

IF(view_ic)THEN

CALL plot_file%setup("gem")

CALL mg_mesh%mesh%setup_io(plot_file,order)

END IF

subroutine oft_init(nthreads)

Initializes Open FUSION Toolkit run environment.

Definition oft_base.F90:193

Perform post-processing

To visualize the solution fields once a simulation has completed the xmhd_plot subroutine is used. This subroutine steps through the restart files produced by xmhd_run and generates plot files, and in our case probe signals for the half-plane flux at evenly spaced points in time as specified in the input file, see xmhd_plot and Post-Processing options.

IF(plot_run)THEN

END IF

subroutine oft_finalize()

Finalize Open FUSION Toolkit environment.

Definition oft_base.F90:356

Initial conditions

For this simulation we set every field using either uniform fields or using our interpolation object. As all non-zero uniform fields utilize a nodel basis, they can be set simply using set() on the relevant vector object. For non-uniform fields, we use the usual projection times mass inverse method to map the field onto a given FE representation.

CALL xmhd_ml_lagrange%vec_create(ic_fields%Ti)

CALL ic_fields%Ti%set(t0)

CALL xmhd_ml_vlagrange%vec_create(ic_fields%V)

CALL ic_fields%V%set(0.d0)

gem_field%mesh=>mg_mesh%mesh

NULLIFY(mop)

CALL oft_lag_getmop(xmhd_ml_lagrange%current_level,mop,"none")

CALL create_cg_solver(minv)

minv%A=>mop

minv%its=-2

CALL create_diag_pre(minv%pre)

CALL xmhd_ml_lagrange%vec_create(u)

CALL xmhd_ml_lagrange%vec_create(v)

gem_field%field='n0'

CALL oft_lag_project(xmhd_ml_lagrange%current_level,gem_field,v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL xmhd_ml_lagrange%vec_create(ic_fields%Ne)

CALL ic_fields%Ne%add(0.d0,1.d0,u)

CALL ic_fields%Ne%scale(n0)

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

NULLIFY(mop)

CALL hcurl_grad_getmop(xmhd_ml_hcurl_grad%current_level,mop,"none")

CALL create_cg_solver(minv)

minv%A=>mop

minv%its=-2

CALL create_bjacobi_pre(minv%pre,-1)

DEALLOCATE(minv%pre%pre)

CALL create_ilu_pre(minv%pre%pre)

CALL xmhd_ml_hcurl_grad%vec_create(u)

CALL xmhd_ml_hcurl_grad%vec_create(v)

gem_field%field='b0'

CALL oft_hcurl_grad_project(xmhd_ml_hcurl_grad%current_level,gem_field,v)

CALL hcurl_grad_gzerop%apply(v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL xmhd_ml_hcurl_grad%vec_create(ic_fields%B)

CALL ic_fields%B%add(0.d0,1.d0,u)

CALL ic_fields%B%scale(b0)

gem_field%field='db'

CALL oft_hcurl_grad_project(xmhd_ml_hcurl_grad%current_level,gem_field,v)

CALL hcurl_grad_gzerop%apply(v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL xmhd_ml_hcurl_grad%vec_create(pert_fields%B)

CALL pert_fields%B%add(0.d0,1.d0,u)

CALL pert_fields%B%scale(b0*db)

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%pre%delete

DEALLOCATE(minv%pre%pre)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

Plot initial conditions

We also add the ability to visualize the initial conditions before running the simulation to verify expected distribution.

IF(view_ic)THEN

NULLIFY(vals)

ALLOCATE(vec_vals(3,ic_fields%Ne%n))

CALL ic_fields%Ne%get_local(vals)

CALL mg_mesh%mesh%save_vertex_scalar(vals,plot_file,'N0')

CALL ic_fields%Ti%get_local(vals)

CALL mg_mesh%mesh%save_vertex_scalar(vals,plot_file,'T0')

DO i=1,3

vals=>vec_vals(i,:)

CALL ic_fields%V%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'V0')

NULLIFY(mop)

CALL oft_lag_vgetmop(xmhd_ml_vlagrange%current_level,mop,"none")

CALL create_cg_solver(minv)

minv%A=>mop

minv%its=-2

CALL create_diag_pre(minv%pre)

CALL xmhd_ml_vlagrange%vec_create(u)

CALL xmhd_ml_vlagrange%vec_create(v)

bfield%u=>ic_fields%B

CALL bfield%setup(xmhd_ml_hcurl_grad%current_level)

CALL oft_lag_vproject(xmhd_ml_lagrange%current_level,bfield,v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'B0')

bfield%u=>pert_fields%B

CALL bfield%setup(xmhd_ml_hcurl_grad%current_level)

CALL oft_lag_vproject(xmhd_ml_lagrange%current_level,bfield,v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'dB')

jfield%u=>ic_fields%B

CALL jfield%setup(xmhd_ml_hcurl_grad%current_level)

CALL oft_lag_vproject(xmhd_ml_lagrange%current_level,jfield,v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'J0')

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

END IF

Run simulation

Finally, the simulation can be run using either the driver routine for linear (xmhd_lin_run) or non-linear MHD (xmhd_run). These routines both advance the solution in time with the physics specified in the input file, see the documentation for xmhd_lin_run and xmhd_run, and produces restart files that contain the solution at different times.

Several quantities are also recorded to a history file xmhd.hist during the simulation. The data in the history file may be plotted using the script plot_mug_hist.py, which is located in src/utilities/scripts or python directories for the repository and an installation respectively.

- Note

- OFT plotting scripts require the python packages

numpy and matplotlib as well as path access to the python modules provided in python for installations or src/utilities in the repo.

xmhd_minlev=minlev

den_scale=n0

oft_env%pm=pm

IF(linear)THEN

CALL xmhd_ml_vlagrange%vec_create(pert_fields%V)

CALL xmhd_ml_lagrange%vec_create(pert_fields%Ti)

CALL xmhd_ml_lagrange%vec_create(pert_fields%Ne)

CALL xmhd_lin_run(ic_fields,pert_fields)

ELSE

CALL ic_fields%B%add(1.d0,1.d0,pert_fields%B)

temp_floor=t0*1.d-2

den_floor=n0*1.d-2

CALL xmhd_run(ic_fields)

END IF

END PROGRAM MUG_slab_recon

Input file

Below is an input file which can be used with this example in a parallel environment. As with MUG Example: Spheromak Tilt and MUG Example: Spheromak Heating this example should only be run with multiple processes. Some annotation of the options is provided inline below, for more information on the available options in the xmhd_options group see xmhd_run.

&runtime_options

ppn=1 ! Number of processors/tasks per node (used for heirarchical domain decomposition)

debug=0 ! Debug level (0-3)

/

&mesh_options

meshname='slab' ! Meshname (unused at present)

cad_type=1 ! Mesh format type (1 -> native)

nlevels=2 ! Number of total grid levels (see docs for more information)

nbase=1 ! Number grid levels before domain decomposition (see docs for more information)

part_meth=2 ! Partition "uniformly" along x-axis

/

&t3d_options

filename='slab_gem.t3d'

inpname='slab_gem.inp'

reflect='xyz' ! Reflect input grid in all directions

ref_per=T,T,F ! Make grid periodic in the X,Y directions

/

&slab_recon_options

order=2 ! FE order

minlev=2 ! Minimum level for MG preconditioning

linear=F ! Perform linear simulation?

view_ic=F ! View initial conditions but do not run simulation

plot_run=F ! Run plotting instead of simulation

pm=F ! View extended linear and non-linear iteration output?

/

&xmhd_options

mu_ion=1. ! Ion mass (atomic units)

xmhd_ohmic=T ! Include Ohmic heating

xmhd_visc_heat=T ! Include viscous heating

xmhd_hall=F ! Include Hall terms?

bbc='bc' ! Perfectly-conducting BC for B-field

vbc='all' ! Zero-flow BC for velocity

nbc='n' ! Neumann BC for density

tbc='n' ! Neumann BC for temperature

dt=8.e-7 ! Maximum time step

eta=742.6 ! Constant resistivity

! eta_hyper=50.0 ! Hyper-resistivity

! me_factor=73.446 ! M_e/M_i = 25.0 (as per GEM challenge paper)

visc_type='iso' ! Use isotropic viscosity tensor

nu_par=7425.9 ! Fluid viscosity

d_dens=50. ! Density diffusion

kappa_par=2943.4 ! Parallel thermal conduction (fixed)

kappa_perp=2943.4 ! Perpendicular thermal conduction (fixed)

nsteps=1000 ! Number of time steps to take

rst_ind=0 ! Index of file to restart from (0 -> use subroutine arguments)

rst_freq=10 ! Restart file frequency

xmhd_mfnk=T ! Use matrix-free method

lin_tol=1.E-8 ! Linear solver tolerance

nl_tol=1.E-6 ! Non-linear solver tolerance

ittarget=30 ! Target for # of linear iterations per time step

xmhd_prefreq=20 ! Preconditioner update frequency

xmhd_nparts=0,20,40 ! Number of parts for local matrix decomposition

nl_update=3 ! # of NL iterations that causes preconditioner update

/

Solver specification

Time dependent MHD solvers are accelerated significantly by the use of a more sophisticated preconditioner than the default method. Below is an example oft_in.xml file that constructs an appropriate multi-grid preconditioner that uses GRMES+Block-Jacobi+LU on each level.

This solver can be used by specifying both the FORTRAN input and XML input files to the executable as below.

~$ ./MUG_slab_recon oft.in oft_in.xml

<oft>

<xmhd>

<pre type="mg">

<smoother direction="up">

<solver type="gmres">

<its>2</its>

<nrits>2</nrits>

<pre type="block_jacobi">

<nlocal>2</nlocal>

<solver type="lu"></solver>

</pre>

</solver>

</smoother>

</pre>

</xmhd>

</oft>

Post-Processing options

During the simulation the evolution of some global quantities is written to the history file xmhd.hist. A utility script (plot_mug_hist.py located in bin after installation) is included as part of OFT for plotting the signals in this file. This is useful for monitoring general progress of the simulation as well as numerical parameters like the iteration count and solver time. Using this script we can see the instability growth and relaxation of the initial magnetic equilibrium to the lowest energy state.

~$ python plot_mug_hist.py xmhd.hist

Creating plot files

To generate 3D plots, and perform additional diagnostic sampling (see oft_xmhd_probe), a plot run can be performed by setting plot_run=T in the slab_recon_options input group, which calls xmhd_plot. With this option additional run time options are available in the xmhd_plot_options group that control how restart files are sampled for plotting.

&xmhd_plot_options

t0=1.E-8

dt=1.E-6

rst_start=0

rst_end=1000

/

Once the post-processing run is complete bin/build_xdmf.py can be used to generate *.xmf files that can be loaded by VisIt, ParaView, or other visualization programs.

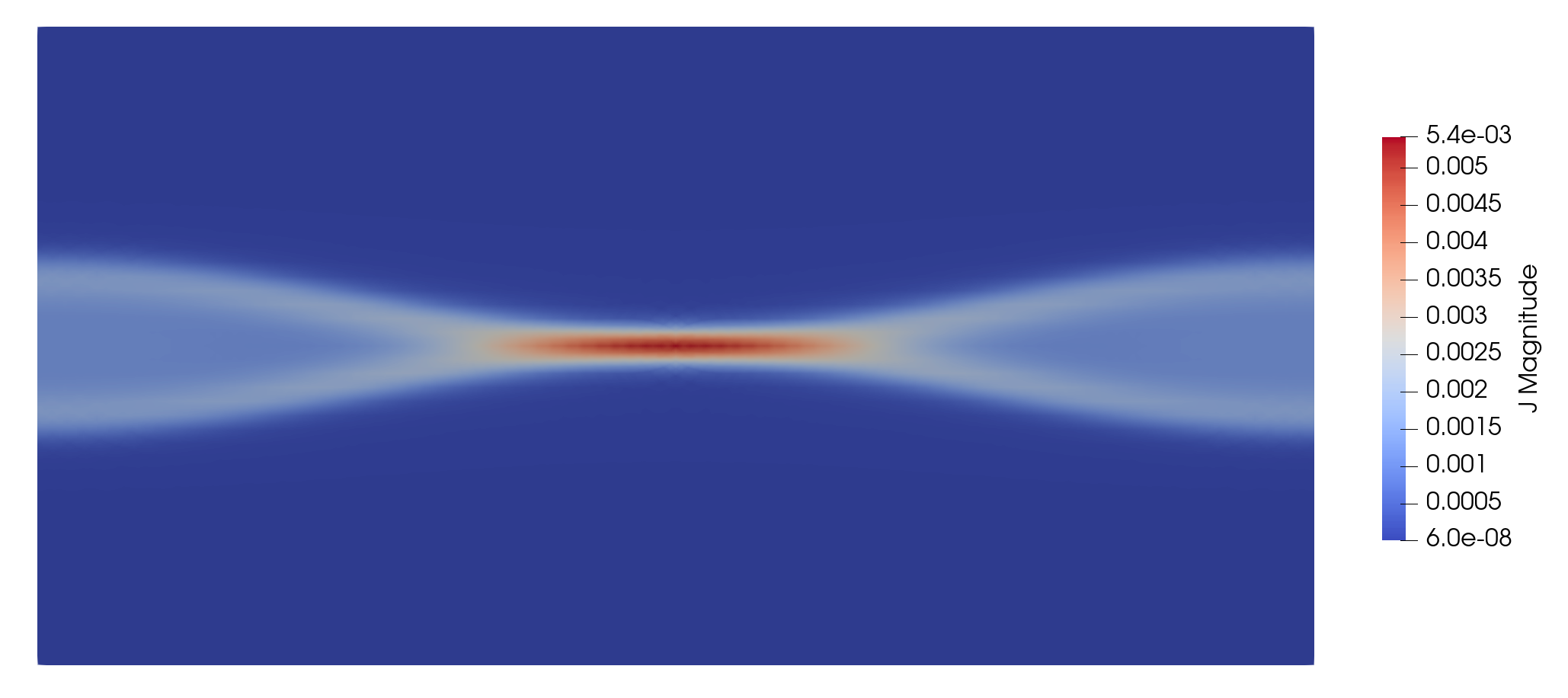

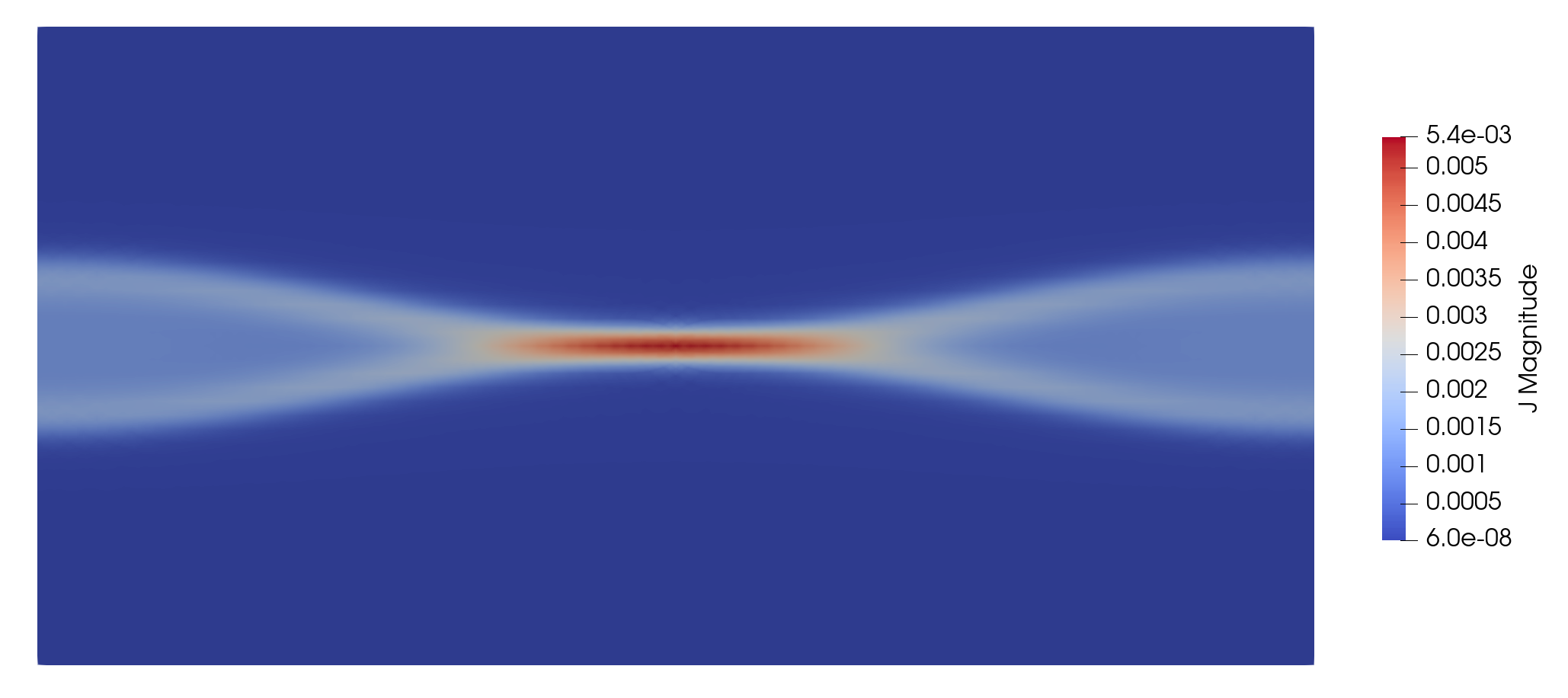

Resulting current distribution at final time

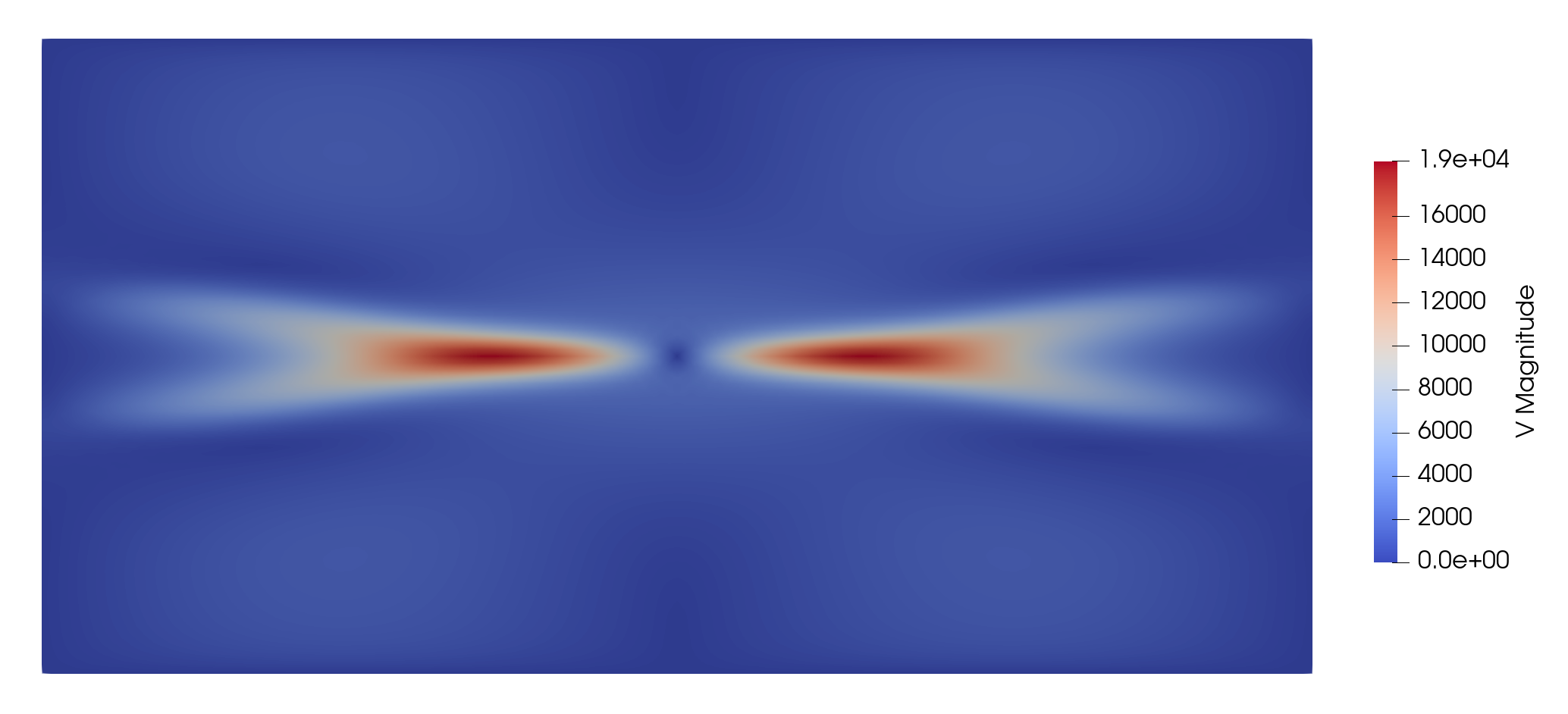

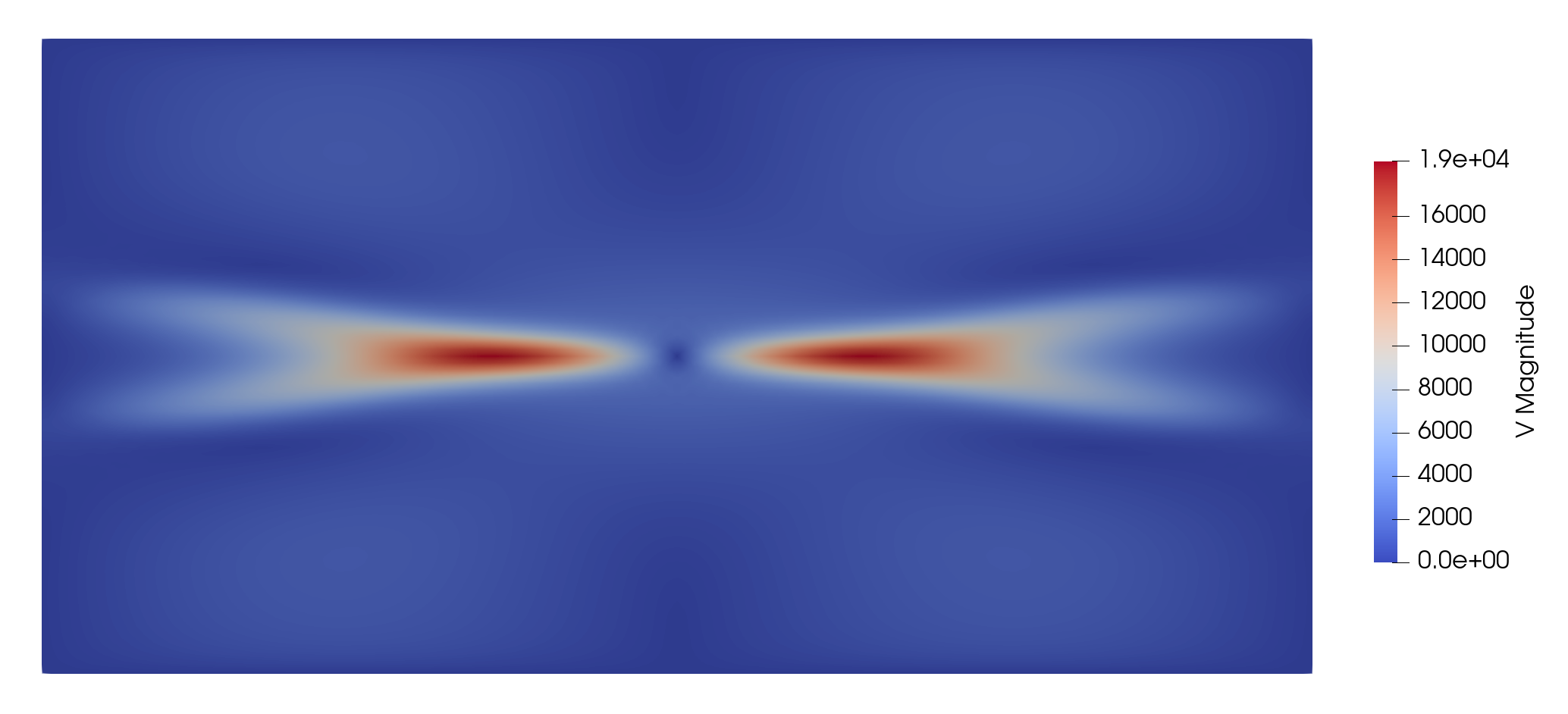

Resulting velocity magnitude distribution at final time

Complete Source

MODULE gem_helpers

IMPLICIT NONE

REAL(8) :: dpsi = 1.d-1

REAL(8) :: den_inf = 2.d-1

REAL(8) :: lam = 0.5d0

REAL(8) :: Lx = 25.6

REAL(8) :: Lz = 12.8

CHARACTER(LEN=2) :: field = 'n'

CONTAINS

PROCEDURE :: interp => gem_interp_apply

END TYPE gem_interp

INTEGER(4) :: io_unit

LOGICAL :: initialized = .false.

TYPE(oft_hcurl_grad_rinterp), POINTER :: Bfield => null()

TYPE(flux_probe) :: flux_probe

TYPE(oft_bin_file) :: flux_hist

CONTAINS

PROCEDURE :: apply => gem_probe_apply

END TYPE gem_probe

CONTAINS

SUBROUTINE gem_interp_apply(self,cell,f,gop,val)

CLASS(GEM_interp), INTENT(inout) :: self

INTEGER(4), INTENT(in) :: cell

REAL(8), INTENT(in) :: f(:)

REAL(8), INTENT(in) :: gop(3,4)

REAL(8), INTENT(out) :: val(:)

REAL(8) :: pt(3),Beq(3),Bper(3)

pt = self%mesh%log2phys(cell,f)

SELECT CASE(trim(self%field))

CASE('n0')

val = 1.d0/(cosh(pt(3)/self%lam))**2 + self%den_inf

CASE('b0')

beq = (/tanh(pt(3)/self%lam), 0.d0, 0.d0/)

val = beq

CASE('db')

bper(1) = -pi*cos(2.d0*pi*pt(1)/self%Lx)*sin(pi*pt(3)/self%Lz)/self%Lz

bper(2) = 0.d0

bper(3) = 2.d0*pi*sin(2.d0*pi*pt(1)/self%Lx)*cos(pi*pt(3)/self%Lz)/self%Lx

val = bper

CASE DEFAULT

CALL oft_abort(

'Unknown field component',

'GEM_interp_apply',__file__)

END SELECT

END SUBROUTINE gem_interp_apply

SUBROUTINE gem_probe_apply(self,sub_fields,t)

CLASS(GEM_probe), INTENT(inout) :: self

type(xmhd_sub_fields), intent(inout) :: sub_fields

REAL(8), INTENT(in) :: t

REAL(8) :: tflux

REAL(4), DIMENSION(2) :: output

IF(.NOT.self%initialized)THEN

ALLOCATE(self%Bfield)

self%flux_probe%hcpc=(/0.d0, 0.d0, 8.d0/)

self%flux_probe%hcpv=(/0.125d0, 0.0d0, 0.0d0/)

CALL self%flux_probe%setup

self%flux_probe%B=>self%Bfield

self%flux_hist%filedesc = 'GEM example reconnected flux'

CALL self%flux_hist%setup('gem_flux.hist')

CALL self%flux_hist%add_field('time', 'r4', desc="Simulation time [s]")

CALL self%flux_hist%add_field('flux', 'r4', desc="Reconnected flux [Wb]")

CALL self%flux_hist%write_header

END IF

self%initialized=.true.

END IF

self%Bfield%u=>sub_fields%B

CALL self%flux_probe%eval(tflux)

output=real([t,tflux],4)

CALL self%flux_hist%open

CALL self%flux_hist%write(data_r4=output)

CALL self%flux_hist%close

END IF

END SUBROUTINE gem_probe_apply

END MODULE gem_helpers

PROGRAM mug_slab_recon

USE gem_helpers, ONLY: gem_interp, gem_probe

IMPLICIT NONE

REAL(8), PARAMETER :: N0 = 5.196374d16

REAL(8), PARAMETER :: T0 = 1.d2

REAL(8), PARAMETER :: B0 = 0.002045692328575d0

CLASS(oft_solver), POINTER :: minv => null()

CLASS(oft_matrix), POINTER :: mop => null()

CLASS(oft_vector), POINTER :: u,v

TYPE(multigrid_mesh) :: mg_mesh

TYPE(xdmf_plot_file) :: plot_file

TYPE(xmhd_sub_fields) :: ic_fields,pert_fields

TYPE(oft_hcurl_grad_gzerop), TARGET :: hcurl_grad_gzerop

TYPE(oft_hcurl_grad_rinterp), TARGET :: bfield

TYPE(oft_hcurl_grad_cinterp), TARGET :: jfield

TYPE(GEM_interp), TARGET :: GEM_field

TYPE(GEM_probe) :: GEM_probes

REAL(8), POINTER :: vals(:),vec_vals(:,:)

INTEGER(4) :: i,ierr,io_unit

INTEGER(4) :: order = 2

INTEGER(4) :: minlev = 1

REAL(8) :: db = 1.d-1

LOGICAL :: pm = .false.

LOGICAL :: linear = .false.

LOGICAL :: plot_run = .false.

LOGICAL :: view_ic = .false.

NAMELIST/slab_recon_options/order,minlev,linear,db,plot_run,view_ic,pm

OPEN(newunit=io_unit,file=

oft_env%ifile)

READ(io_unit,slab_recon_options,iostat=ierr)

CLOSE(io_unit)

IF(view_ic)THEN

CALL plot_file%setup("gem")

CALL mg_mesh%mesh%setup_io(plot_file,order)

END IF

IF(plot_run)THEN

END IF

CALL ic_fields%Ti%set(t0)

CALL ic_fields%V%set(0.d0)

gem_field%mesh=>mg_mesh%mesh

NULLIFY(mop)

minv%A=>mop

minv%its=-2

gem_field%field='n0'

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL ic_fields%Ne%add(0.d0,1.d0,u)

CALL ic_fields%Ne%scale(n0)

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

NULLIFY(mop)

minv%A=>mop

minv%its=-2

DEALLOCATE(minv%pre%pre)

gem_field%field='b0'

CALL hcurl_grad_gzerop%apply(v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL ic_fields%B%add(0.d0,1.d0,u)

CALL ic_fields%B%scale(b0)

gem_field%field='db'

CALL hcurl_grad_gzerop%apply(v)

CALL u%set(0.d0)

CALL minv%apply(u,v)

CALL pert_fields%B%add(0.d0,1.d0,u)

CALL pert_fields%B%scale(b0*db)

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%pre%delete

DEALLOCATE(minv%pre%pre)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

IF(view_ic)THEN

NULLIFY(vals)

ALLOCATE(vec_vals(3,ic_fields%Ne%n))

CALL ic_fields%Ne%get_local(vals)

CALL mg_mesh%mesh%save_vertex_scalar(vals,plot_file,'N0')

CALL ic_fields%Ti%get_local(vals)

CALL mg_mesh%mesh%save_vertex_scalar(vals,plot_file,'T0')

DO i=1,3

vals=>vec_vals(i,:)

CALL ic_fields%V%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'V0')

NULLIFY(mop)

minv%A=>mop

minv%its=-2

bfield%u=>ic_fields%B

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'B0')

bfield%u=>pert_fields%B

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'dB')

jfield%u=>ic_fields%B

CALL u%set(0.d0)

CALL minv%apply(u,v)

DO i=1,3

vals=>vec_vals(i,:)

CALL u%get_local(vals,i)

END DO

CALL mg_mesh%mesh%save_vertex_vector(vec_vals,plot_file,'J0')

CALL u%delete

CALL v%delete

CALL mop%delete

DEALLOCATE(u,v,mop)

CALL minv%pre%delete

DEALLOCATE(minv%pre)

CALL minv%delete

DEALLOCATE(minv)

END IF

IF(linear)THEN

ELSE

CALL ic_fields%B%add(1.d0,1.d0,pert_fields%B)

END IF

END PROGRAM MUG_slab_recon